We cannot improve if we don't learn. We can't learn if we don't understand.

Jun 06, 2018

"The divers were instructed by the DM to swim away from shore and then they were taken away down current and then spent the next 7 hours fighting for their survival in Xm high waves before being picked up some nine miles away..."

"How stupid could they be? It is obvious that they should have ignored the DM's instructions and swum to shore. That's what I would have done."

-

"The instructor had a double cell failure in the rebreather which meant that the voting logic gave them erroneous information in terms do of the pO2 within the breathing loop. They carried on their dive despite numerous warnings provided by the controller that there was an issue. Unfortunately, the voting logic meant that the solenoid was instructed to fire and the diver suffered from an oxygen toxicity seizure and drowned. The three cells were subsequently found out to be 17, 40 and 40 months old."

"It's obvious that he should have aborted dive when he had the warning. How stupid could he be? I'd never make that mistake."

These relate to two real adverse events. In the former no-one died, but in the latter the instructor perished despite being recovered from depth by their students, who were undertaking an entry-level (Mod 1) closed circuit rebreather class. The incidents themselves are almost irrelevant to the main theme of this article, which focuses on the way the diving community often makes comments that do nothing to improve learning. This matters because we need to encourage a culture in which we can talk about the failures we make and, most importantly, why it made sense to us to behave in the manner we did. Without this dialogue, we are not going to be able to prevent future adverse events, because we need the story, not just the statistics on the number of people who die or get injured while SCUBA diving or freediving (apnea).

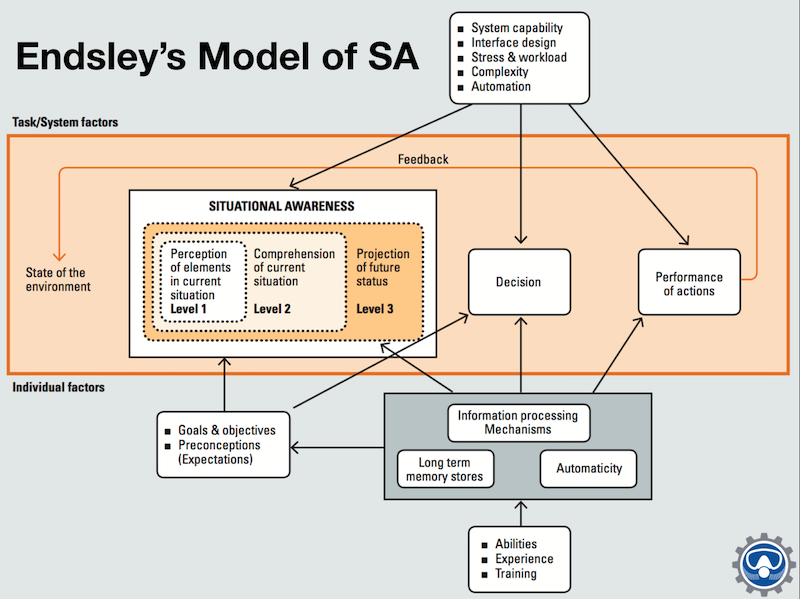

Unless they are in an extremely depressed state, divers do not get up in the morning and decide "Today is a good day to die." Instead, divers, in the main, are trying to do the best they can with the resources they have available, be that time, money, people or equipment, or a combination of these. The decisions we make are influenced by what we notice going on around us, how that perception matches previous models or experiences and their outcomes, and what we think is going to happen next. This continual cycle of Notice >> Think >> Anticipate is at the core of Mica Endsley's Model of Situational Awareness, shown below. Once we have developed an understanding of the situation, we make a decision, execute the action linked to that decision, wait for an outcome and then carry on, all the time updating our library of experiences and long-term memory via the feedback loop shown at the top of the diagram.

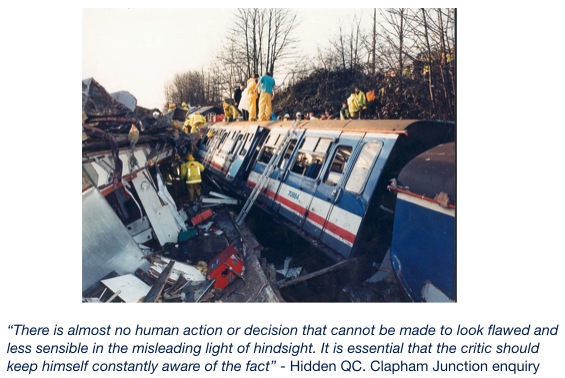

The problem when we discuss incidents after the event is that we have information those involved did not, information that would have reduced their uncertainty almost to zero. Fundamentally, we have the crucial piece they were missing: we know the actual outcome, the 100% outcome, rather than a 'risk of an adverse outcome'. As a consequence, we are now influenced by both outcome bias and hindsight bias.

Remember that those involved at the time are subject to "What you see/have is all there is". Observers often comment that it was 'obvious' or 'common sense' that this would happen, but unless you have experienced such an event, you are unlikely to have that 'common sense'. Besides, if it was that obvious, don't you think those involved would have acted on it rather than carried on regardless?

If you don't believe me, think back to when you were a child and burned yourself touching something hot, despite your parents saying "Don't touch that, it's hot!" Or, closer to diving, think back to learning buoyancy control.

Buoyancy Control - Learning from Feedback

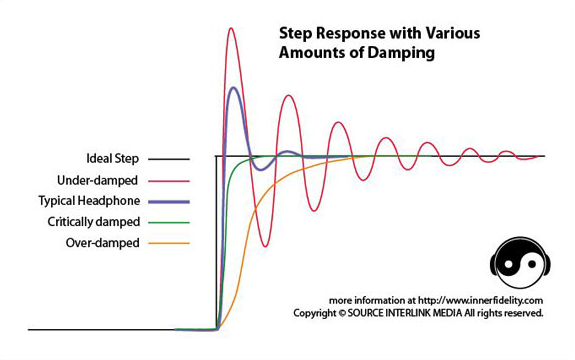

The instructor (hopefully) told you to make only small corrections when putting gas into, or letting gas out of, your buoyancy sources (drysuit or BCD/wing). The more you injected, the faster you ascended, which meant that when you arrived at your stop, you had to let more out. If you over-compensated for the expanding gas in the buoyancy device, you sank, and potentially ended up in a pendulum state, alternating between too much and too little buoyancy. I certainly remember this happening on my Advanced Nitrox and Decompression Procedures class, where it took my buddy and me something like 15 minutes (it should have been 6!) to ascend from 21m, because we were ascending mid-water without a dSMB as a reference line and were referencing off each other. Very embarrassing!!

The optimal approach is to keep deviations as small as possible within the operating limits, and to use a feedback loop to maintain that minimum deviation. The image above shows how different amounts of damping or feedback change the rate at which an activity closes on the ideal line.
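
To make the damping idea concrete, here is a toy Python sketch. It is an illustration under stated assumptions, not a model of real buoyancy: the deviation `x` from the ideal line (say, metres away from a stop) is treated as a simple mass-spring-damper, where `k` is how hard you correct back towards the target and `c` is how much you damp each correction. The parameter values are arbitrary choices for the example. With light damping the deviation swings back and forth across the ideal line, much like the pendulum state in the buoyancy example; with heavy damping it closes on the line without crossing it.

```python
"""Toy illustration of how damping affects settling onto a target.

Assumption: a deliberately simplified mass-spring-damper model, not a
physical model of buoyancy. `x` is the deviation from the ideal line,
`k` is how strongly you correct towards it, `c` how much you damp.
"""


def simulate(c, k=1.0, x0=1.0, dt=0.01, t_end=20.0):
    """Integrate x'' = -k*x - c*x' with semi-implicit Euler; return x history."""
    x, v = x0, 0.0
    history = [x]
    for _ in range(round(t_end / dt)):
        v += dt * (-k * x - c * v)  # corrective push towards the line, minus damping
        x += dt * v                 # update the deviation using the new velocity
        history.append(x)
    return history


def overshoots(history):
    """Count how many times the deviation crosses the ideal line (changes sign)."""
    return sum(1 for a, b in zip(history, history[1:]) if a * b < 0)


for c in (0.2, 1.0, 3.0):
    h = simulate(c)
    print(f"damping c={c}: crossings={overshoots(h)}, final deviation={h[-1]:+.4f}")
```

In this toy model the heavily damped run never crosses the ideal line but also takes its time getting there; part of the skill is finding enough damping to stop the pendulum without making every correction sluggish.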

As we learn to do something new, we need a feedback loop if we are to improve; otherwise we don't know how to bring the system back under control. That feedback loop might be a friend or an instructor. However, the feedback needs to be honest, not just platitudes!!

Fortunately or unfortunately, depending on how you look at it, adverse events rarely happen in diving, which means that we don't get direct experience of bad things happening. As a consequence, our library of long-term memories often has no relevant experiences to refer to. Even more important is the fact that we don't know the precursors or trigger information which led to the adverse event in the first place, so we can't spot them developing. Saying someone had poor situational awareness is flawed if they didn't know what to focus on when gathering the information on which to base their decision.

This 'brilliant' circular argument comes from a marine accident report quoted in Sidney Dekker's The Field Guide to Understanding 'Human Error'.

"The ship crashed."

"Why?"

"Because the crew lost situational awareness."

"How do you know they lost situational awareness?"

"Because the ship crashed."

As you can see, without understanding why it made sense to the crew to make the decisions they did, we cannot improve things. Just substitute 'diver' for 'crew' and 'rapid ascent' for 'crash' to make it more relevant to your own environment.

"The problem you think you have probably isn't the problem you've got."

For example, whilst you might know that a rapid ascent is bad and should be avoided, you may not know what to look for to stop the ascent rate becoming too fast to control until it is too late. Unless you sit down with someone and talk through the event, you may not be able to identify what the triggers were, so you cannot look out for them next time and improve your situational awareness. Worse still, you could choose the wrong course of action because it was intuitive rather than evidence-based.

This approach is perfectly natural because, when we don't have a real experience to refer to, we pick the closest 'model' in our long-term memory and try to make it fit. For example, those who have had rapid ascents from depth often think that adding more weight is the way to prevent future rapid ascents. Unfortunately, in the majority of cases, it is the excess weight that caused the problem: an overweighted diver needs a larger bubble of gas to stay neutral than a correctly weighted diver, and that bubble will expand and need to be controlled during the ascent. This means control needs to be better and more timely, with more gas dumped at the correct depths, which demands an additional level of skill. So despite knowing that rapid ascents are bad, you are none the wiser as to how to solve the issue.
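
The weighting point can be checked with some rough arithmetic. The Python sketch below applies Boyle's law (pressure × volume stays constant at constant temperature) using the common approximation of 1 bar absolute at the surface plus about 1 bar per 10 m of water. The gas volumes, the 30 m and 6 m depths and the 2 kg of extra lead are hypothetical numbers chosen for illustration, not planning figures. It compares how much gas each diver has to vent between 30 m and a 6 m stop to stay neutral.

```python
"""Rough arithmetic for why extra lead means extra gas to manage on ascent.

Assumptions (illustrative only, not dive-planning figures):
- absolute pressure ~ 1 bar + 1 bar per 10 m of water;
- all gas sits in a flexible BCD/wing or drysuit, so Boyle's law
  (P1 * V1 = P2 * V2 at constant temperature) applies;
- the volumes below are hypothetical.
"""


def absolute_pressure_bar(depth_m):
    # Rule of thumb: surface pressure plus about 1 bar per 10 m of water.
    return 1.0 + depth_m / 10.0


def expanded_volume(volume_l, from_depth_m, to_depth_m):
    # Boyle's law: V2 = V1 * P1 / P2.
    return volume_l * absolute_pressure_bar(from_depth_m) / absolute_pressure_bar(to_depth_m)


def gas_to_vent(volume_l, from_depth_m, to_depth_m):
    # Extra volume that must be dumped to stay neutral at the shallower depth.
    return expanded_volume(volume_l, from_depth_m, to_depth_m) - volume_l


# Hypothetical: neutral at 30 m, a correctly weighted diver carries 3 L of gas;
# with 2 kg of extra lead they need roughly 2 L more (about 1 L offsets ~1 kg).
for label, litres in (("correctly weighted", 3.0), ("2 kg overweighted", 5.0)):
    vent = gas_to_vent(litres, 30, 6)
    print(f"{label}: {litres:.1f} L at 30 m must shed {vent:.1f} L by 6 m")
```

With these assumed numbers, the correctly weighted diver has about 4.5 L to vent on the way to 6 m while the overweighted diver has about 7.5 L, and the expansion per metre grows as the diver gets shallower.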

The problem with being negatively critical of adverse events is that the social backlash is often seen as punishment for talking about normal human behaviour, behaviour that is often labelled 'human error'. Unfortunately, 'human error' can really only be defined or attributed after the event. The same can be said of 'complacency'. To operate at the pace we do in the modern world, we need to take mental shortcuts all the time. These are known as heuristics.

"It looked like one of those, therefore it is one of those".

The majority of the time these heuristics work and there is a positive (or non-negative) outcome, and this is often called 'efficiency'. Remember that just because you didn't get injured or killed, it doesn't mean it was 'safe'. However, sometimes our interpretation of the real world is flawed, so the model we are using is incorrect and the mental shortcuts we take end in an adverse event. This is then often called 'complacency'! We are judging based on the known outcome.

To improve matters, we have to understand the drivers of the decision-making: fundamentally, what shaped their situational awareness and decision-making processes, and why it made sense to them to behave in the manner they did. This means we need to ask questions in a positive frame and create an environment where people can tell the whole story. It might be that they didn't have enough time, their training was inadequate, the dive centre was pushed for cash and couldn't provide the requisite number of staff, and so on. If divers don't feel they can tell the truth for fear of being castigated in public, then only part of the story will be told, and that part may leave out crucial facts. Continual failures at the sharp end are not normally the problem of those at the sharp end; they are indicative of system-level problems, which may involve organisations, supervisors or dive centres.

When divers post personal accounts, the responses are normally a little more controlled. However, when third-party reports are posted, the responses are usually far less positive. So the next time someone posts an accident report about a third party, think through the questions "Why would it have made sense for those involved to behave in the manner they did?" and "What drivers were likely pressuring them to behave in the manner they did?", not "How could they have been so stupid?" or "Look, another Darwin Award winner". We need to encourage a Just Culture so that divers (and all professions, really) are able to talk about failure candidly, secure in the knowledge that feedback will be provided in a positive frame rather than them being berated for being stupid...

If you liked this post, and think others would benefit from learning about the topic, feel free to post and share to your walls, groups and club sites. I am not precious about where my posts go...and if they end up in the bin, please tell me why so I can make the next one better.

Gareth Lock is the owner of The Human Diver, a niche company focused on educating and developing divers, instructors and related teams to be high-performing. If you'd like to deepen your diving experience, consider taking the online introduction course, which will change your attitude towards diving because safety is your perception. Visit the website to find out more.
