Why ‘Human Error’ is a poor term if we are to improve diving safety

Feb 06, 2018

This blog was originally submitted as an article for the NAUI ICUE Journal following my presentation at the Long Beach show in 2017.

The aim of this manuscript is to help the reader recognise that accident and incident reports often give the cause of the outcome as ‘human error’, and yet this is a simplistic and reductionist approach which adds very little when it comes to improving diving safety. Consequently, the article will explain that we need to understand the context and sense-making present in any adverse event and determine why it made sense to those involved to make the decisions they made. Fundamentally, divers or instructors do not aim to injure or kill themselves on a dive. If, using hindsight, the risks are perceived to be so obvious, why didn’t those involved see them and prevent the accident from happening?

The paper will explain some of the theory behind human error and, in the process, describe a couple of case studies to show that whilst the simplification to ‘human error’ is easy, it belies the complexity of the messy and ambiguous real world in which we dive (and live). It will then highlight ways in which the diving community can improve their performance and safety.

In 2012, an experienced scientific diver was undertaking a training dive to 110ft with a buddy who had fewer than 20 rebreather dives after completing her certification. The plan was relatively simple with a low workload, but after nearly 20 minutes at depth, the subject diver had a seizure caused by oxygen toxicity when his breathing loop pO2 was more than 3.0. The unconscious diver was sent to the surface as the rescuer was losing control of the ascent. Once on the surface, they were recovered by the surface team, given CPR and first aid, and evacuated to a chamber where they made a full recovery. The simplistic reason for this near-death experience was that the diver in question was using a rebreather with cells which were 33 months old and had missed the cautions which were displayed by the rebreather on the heads-up display and the handset. However, as will be explained later in the paper, the reality was far more complex than that.

‘Human Error’ in Diving

Reviewing the literature, it can be seen that ‘diver error’ is present in research papers and textbooks. Part of the reason the author believes this to be the case is that there is no taxonomy that allows human factors, or a deeper understanding of the reasons for the accident, to be determined. In Bierens’ ‘Handbook on Drowning: Prevention, Rescue, Treatment’ (section 175.2.6) (1) he states “A thorough investigation usually reveals a critical error in judgement, the diver going beyond his or her level of training and experience, or a violation of generally accepted safe diving practices. In other words, the root cause is most commonly ‘diver error’”. In Denoble’s paper presented at the 2014 Medical Examination of Diving Fatalities conference (2), he states that “Fatality investigation is conducted by legal authorities focused on a single case. The main purpose is the attribution of legal responsibility and this determines how the causation is established. In most cases, the inquiry ends with establishing the proximal cause of death.” In aviation, this attitude shifted in the 1980s from ‘the pilot was last to touch it, therefore it was his fault’ towards looking at systemic issues, including human factors. In 2008, Denoble, Caruso et al (3) published their research which determined the triggers, disabling agent/action, disabling injury and cause of death of 947 diving fatalities from the DAN database. Speaking with Denoble in 2016, he stated that this classification process was recognised as being limiting but a pragmatic approach was needed and they needed to draw a line somewhere. This is understood. However, more work needs to be done to understand why 41% of the 947 divers in their study died due to insufficient gas. Most of the time such a situation is totally preventable, but telling people not to run out won’t solve the problem, any more than telling people the paint is wet, or the stove is hot.

What is ‘Human Error’?

One of the problems encountered is that ‘human error’ can have a number of different meanings. Hollnagel (4) describes these as:

- Sense #1 – error as the cause of failure: ‘This event was due to human error.’ The assumption is that error is some basic category or type of human behaviour that precedes and generates a failure. It leads to variations on the myth that safety is protecting the system and stakeholders from erratic, unreliable people. “You can’t fix stupid”.

- Sense #2 – error as the failure itself, i.e. the consequences that flow from an event: “The choice of dive location was an error.” In this sense the term ‘error’ simply asserts that the outcome was bad, producing negative consequences (e.g. caused injuries to the diver).

- Sense #3 – error as a process, or more precisely, departures from the ‘good’ process. Here, the sense of error is of deviation from a standard, that is, a model of what is good practice, but the difficulty is that there are different models of the process that should be followed. In diving, given the vast variance in standards and skills, it is unclear which standard is applicable, how standards should be described and, most importantly, what it means when deviations from the standards do not result in bad outcomes.

Consequently, depending on the model adopted, very different views of ‘error’ can result.

Reason’s Model of Human Error

Professor James Reason in his book ‘Human Error’ (5) described the ‘Swiss Cheese Model’ as a way of explaining how errors occurred, with the layers in the model being barriers or defences which are there to prevent the accident from occurring. These layers were entitled ‘Organisational Influence’, ‘Unsafe Supervision’, ‘Latent Failure’ and ‘Unsafe Acts’. ‘Unsafe acts’ covered both errors and violations and could be considered the ‘last straw which broke the camel’s back’. Each of these layers described could have holes in them, in effect gaps in the defences which we put in place to prevent an adverse event. These holes are present because as humans we are all fallible and we often miss things or don’t understand the complex interactions which are possible. In most cases, these mistakes or errors don’t matter that much because they are picked up before the situation is critical. However, in some cases, the holes in the cheese line up and an accident happens. The diagram below shows this, but there is a need to recognise that this simple 2D static model doesn’t replicate the real world in which the holes move and change size so the ability to detect the failures is much harder, especially given the limitations of the human brain and the biases we are subject to.

Animated Swiss Cheese Model from Human In the System

As many researchers have shown, continual failures at the ‘sharp end’ often mean that there are systemic issues at hand, including physical hardware design. This fact was known in the Second World War when B-17 bomber pilots were continually raising their landing gear instead of raising the flaps whilst taxying in. Selecting the gear up whilst taxying would cause the aircraft to crash. No amount of training could resolve the issue across the fleet. However, they invited Fitts, a psychologist, and his team to see what the pilots were doing, and they noticed that the levers to raise the landing gear and the flaps were the same shape and size and next to each other on the control panel. He suggested that they be spatially separated and that the gear handle have a wheel put on it so it was obvious it was the gear. The result: no more accidents!

Violations - A Special Sort of Error

At the bottom of Reason’s model within the ‘Unsafe Acts’ section, there are 4 headings - slips, lapses, errors and violations. Violations were defined as the operator (diver) being responsible for actively creating the problem by ignoring or wilfully breaking the rules. However, modern safety research has shown that violations are pretty normal, that workers are trying to achieve the best outcome given the pressures they are under, and that it would be better to change the system rather than add more rules. Hudson showed that 78% of workers in an offshore environment had broken the rules or wouldn’t have a problem breaking them if the opportunity arose, and only 22% had never broken rules and wouldn’t do so. Phipps et al (6) identified in the anaesthetic domain that doctors would break rules but that it would depend on:

- The Rule: How credible it was, who owned (wrote) it, what would happen if the rule was broken (punishment) and how clear the rule was.

- The Anaesthetist: What their risk perception was like, how experienced and proficient they were, and what the professional group norm was.

- The Organisational Culture/Situational Factors: How much time pressure they were under, how limited they were in terms of resources, the design of equipment and how many concurrent tasks were being managed.

In terms of diving, there are few formal rules which need to be followed and much of the adherence is based on risk perception, social or peer pressures to conform and time or financial pressures. If divers have broken ‘rules’ and something adverse has happened, it is better to look at why the diver did what they did rather than immediately judge and call them stupid for breaking the rules.

Hindsight Bias

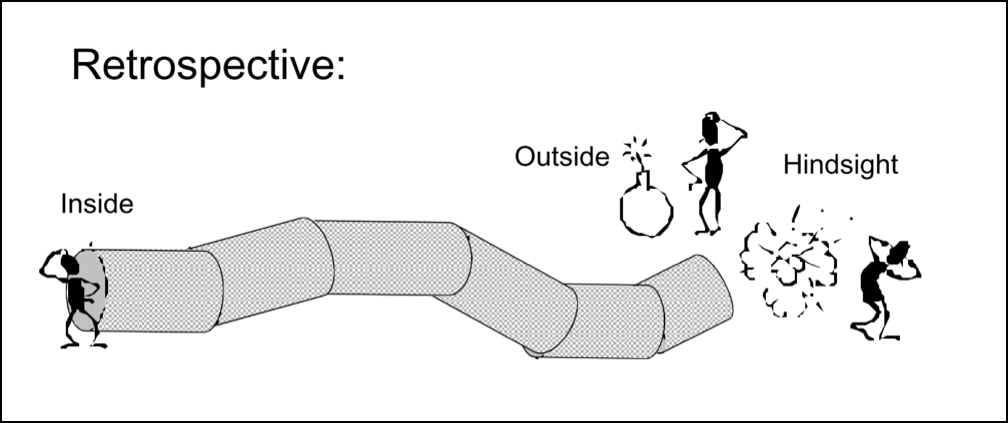

There are a couple of biases which divers are all subject to when it comes to incidents and accidents and how they are perceived in terms of causality and contributory factors. These are hindsight bias and outcome bias. A couple of examples of hindsight bias are shown in the cartoon images from Sidney Dekker’s ‘The Field Guide to Understanding Human Error’ (7). The retrospective view provides a different perspective from that of the person involved in the sequence of events. When looking from the outside and with hindsight, an observer has knowledge of the outcome and the dangers involved. However, from the inside, the diver has neither. Dekker’s use of tubes highlights the limited visibility of what is going on due to focused attention and limited situational awareness.

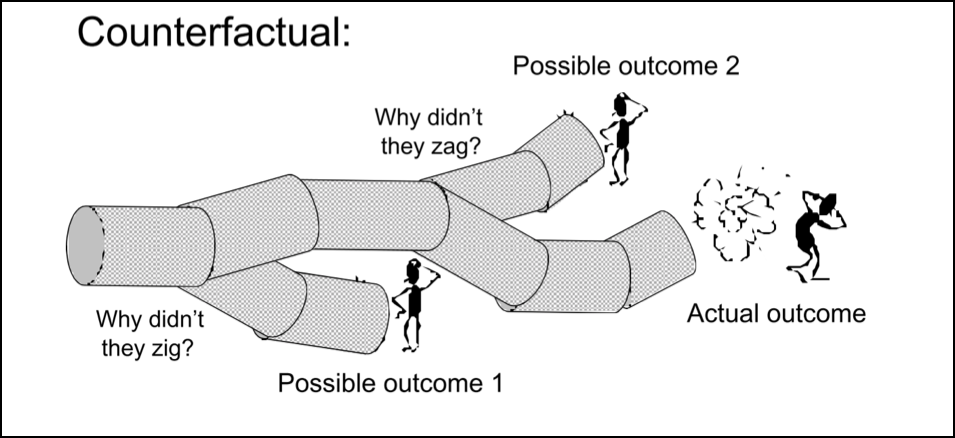

Another take on this is the counter-factual approach to explaining an accident or incident. This is where people go back through a sequence of events and wonder why others missed the opportunities to direct events away from the eventual outcome. Whilst this can explain where the diver went wrong, it does not explain failure.

Hindsight bias is something that everyone involved in looking at diving accidents and incidents should be aware of, not least because of what Hidden QC, the barrister who led the report examining the Clapham Junction train crash in the UK, in which 35 people died and more than 450 people were injured, said: “There is almost no human action or decision that cannot be made to look flawed and less sensible in the misleading light of hindsight. It is essential that the critic should keep himself constantly aware of the fact.”

Situational Awareness

The model below was created by Mica Endsley in 1988 (8). She describes it as follows: “Situational awareness is the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning and a projection of their status in the near future.” The model shows that situational awareness is not just the here and now: individual factors and task/system factors also influence what is ‘relevant’ and what can reduce situational awareness. Something to consider is that situational awareness is what it is for the diver. To say that situational awareness has been lost misses the point that attention is a finite resource: if something grabs the attention of the diver or is perceived to be more important, then the brain will drop the ‘apparently’ less important activity and focus on the new one. Unfortunately, it is only after the event, when something has gone wrong, that it is possible to determine whether the element which was ‘dropped’ was actually a relevant element or one which should have been monitored more closely. Again, the reduction of an accident or incident to ‘loss of situational awareness’ is flawed as it is really just another term for ‘human error’ - there is a need to understand why the prioritisation of elements was perceived to be correct for the activity at hand.

Decision Making - All models are wrong, but some are useful

Our situational awareness and decision-making processes are influenced by mental models which are created based on experiences. Those experiences can be direct, where the diver has physically encountered the activity or subject, or indirect, via learning materials or storytelling. The ease with which it is possible to recall those models is influenced by a number of cognitive biases as well as the emotional significance attached to the event when it was experienced and encoded in long-term memory. Of note, those events with higher emotional significance will be recalled more easily, which in itself biases our decision-making process. Gary Klein, one of the world’s leading researchers on decision making, produced the simple model below which comes from ‘Streetlights and Shadows’ (9) and explains this cyclic process.

A point to note is that experts have more models with which to create these subconscious mental simulations and they can determine which are the more relevant elements (cues/patterns) to look for in a dynamic and ambiguous scene than novices. This is why they make better and faster decisions. In the context of diving, this is why it is essential that skills and experience are developed outside the training environment to expose the diver to the uncertain and ambiguous environment we dive in - the training system cannot teach every situation and every answer.

Being a deviant is normal

Over time the mental models that are created drift from the standards which are taught or should be adhered to. As the performance drifts, new baselines or models as to what is ‘correct’ are created. In diving, there is a lack of standards about what is ‘right’ and, in the main, no formal process to reassess performance after a period of time. Consequently, this drift is normally only picked up when something major goes wrong, or divers have ‘safe, scary’ moments which identify their deviance. This regular and accepted resetting of new baselines is known as ‘Normalisation of Deviance’ and came from the investigation by Diane Vaughan into the Challenger Shuttle disaster in which she identified this problem with NASA at an organisational level. The systemic migration to a more risky environment is a natural tendency, something which was identified by Amalberti (10). A simplified and adapted model of this progression is shown in the diagram immediately below.

In the second diagram, adapted from work by Cook and Rasmussen (11), it can be seen that normal operations switch between ‘safe’ and ‘unsafe’ but as deviations become ‘normal’ a new baseline is set. Operations continue here but at some point, something happens and the activity moves over the ‘unacceptable performance boundary’ and an accident happens. The difficulty is that it is not possible to determine where that boundary is, or what specific combination of factors must come together to cause the adverse event.

Brief Analysis of The Oxygen Toxicity Event

As a review, the diver in question suffered a seizure due to high partial pressures of oxygen in their breathing loop (pO2 was more than 3.0) and the cells in the rebreather were 33 months old.

Now to the context. The team who had this event used two sorts of rebreathers. The most prevalent, when serviced, would come back with new oxygen cells fitted. However, the rebreather in question had gone back to its manufacturer in July for a service and did not have its cells replaced with new ones. The reason was that the manufacturer was struggling with the reliability of oxygen cells and customers were complaining about losing dives. As a consequence, the manufacturer decided to return units with customers’ cells fitted but would run a test on them to see if they performed to specification and provide a service note to the customer about changing the cells. The team received the unit back, noted the cells performed 100% but missed the piece about replacing them. The unit went on the shelf for 4 months. The day before the dive in question, the unit was dived and performed without an issue. This was the first time it had been dived post-service and an assumption was likely made that the cells were in date, as no-one had noted the ‘change cells’ comment from the manufacturer. The checklist they were using at the time did not state to check the cell dates.

On the day of the incident, the diver and his buddy entered the water. They descended to approximately 100ft to carry out their dive. After 9 mins there was a caution displayed on the handset along with a HUD indication. The diver looked down and could not reconcile what the error meant ('cell millivolt (mV) error') as they had not seen it before. The HUD colour indicated a caution and not a warning (RED) so they carried on. After 2 mins the HUD colour changed back to normal as the system was designed to do. What was happening was that two cells had failed and were now current-limited. The rebreather uses a voting logic which, simplified, means that 2 cells reading the same take primacy over a single cell, even if the 2 are incorrect - the system cannot know which is correct. The 2 cells which had failed had failed at a pO2 of approximately 1.0. This meant that no matter how much oxygen was injected into the loop, the system believed it was below the set point of 1.3, and would keep injecting oxygen into the loop, taking the real pO2 higher and higher. The only indication to the diver that this was the case would have come from checking the mV readings on the handset, which should happen, but divers are believed not to do this very regularly. At 19:30 mins into the dive, the loop pO2 was 3.02 and the third cell was now failing - it is believed that the loop pO2 reached a maximum of 4.1 using extrapolated data. At approximately 26:50, the diver suffered a seizure and was rescued. The rescue and extraction of the divers to the chamber and medical facilities went without a flaw and was commended in the subsequent investigation.
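The interaction between current-limited cells and voting logic can be illustrated with a short simulation. This is only a sketch under stated assumptions: the real unit's firmware is not described in this article, so the agreement tolerance, the per-step injection amount and all function names below are hypothetical, and the model ignores depth changes and the diver's oxygen consumption. Only the set point of 1.3 and the approximate cell ceiling of 1.0 come from the incident description.

```python
from itertools import combinations

SETPOINT = 1.3          # set point given in the incident description
CELL_CEILING = 1.0      # approximate pO2 at which the two failed cells limited
AGREE_TOLERANCE = 0.05  # hypothetical: how close two cells must read to "agree"


def cell_reading(true_po2, current_limited):
    """A current-limited cell cannot report a value above its ceiling."""
    return min(true_po2, CELL_CEILING) if current_limited else true_po2


def voted_po2(readings):
    """Simplified voting: the first pair of agreeing cells outvotes the third."""
    for a, b in combinations(readings, 2):
        if abs(a - b) <= AGREE_TOLERANCE:
            return (a + b) / 2
    # No pair agrees: fall back to the mean of all cells (an assumption).
    return sum(readings) / len(readings)


def simulate(steps, start_po2=1.0, injection=0.1):
    """Inject oxygen whenever the voted pO2 is below the set point."""
    true_po2 = start_po2
    history = []
    for _ in range(steps):
        readings = [
            cell_reading(true_po2, current_limited=True),   # failed cell
            cell_reading(true_po2, current_limited=True),   # failed cell
            cell_reading(true_po2, current_limited=False),  # healthy cell
        ]
        believed = voted_po2(readings)
        if believed < SETPOINT:
            true_po2 += injection  # controller keeps adding oxygen
        history.append((round(believed, 2), round(true_po2, 2)))
    return history


if __name__ == "__main__":
    for believed, actual in simulate(20):
        print(f"controller believes {believed:.2f}, loop is actually {actual:.2f}")
```

In this toy model the two failed cells always agree with each other, so the healthy cell is outvoted on every step: the believed pO2 never reaches the set point while the modelled loop pO2 rises with each injection. From the controller's point of view nothing is wrong, which matches the account above of a HUD that returned to normal.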

Given that the aim of the paper is to look at human error in context, the context and factors will now be revisited to review why it made sense for the diver to do what they did.

The rebreather had come back from the manufacturer with a clean bill of health saying the sensors were performing at 100% of specification. However, the team missed the comment about changing the cells. Consequently, their mental model was that the cells had been changed, based on their experiences with the other manufacturer, whose rebreather was more prevalent in the team. The unit went into the dive locker for four months before being used again. The unit was dived the day before and performed as expected. They did not check the cell dates because there was no checklist which asked for this and the unit had come back from the manufacturer only four months prior - both cases of biases which are normal. On the dive in question, the diver had noticed the HUD caution and handset information, but had not seen this specific error before during their training (it is not possible to simulate it). Because it was a caution, not a warning, they did not believe it to be that serious, and when it went away, they presumed the fault was transient. The diver was likely relatively task loaded, having not dived a rebreather for a while, and they were also looking after an inexperienced diver, so didn’t check the handset as often as they should have done (hindsight bias on my part). The HUD didn’t give any indication that something was wrong because the voting logic was working as designed. As a result, the diver made an assumption, based on previous experiences which informed their mental models, that everything was ok when in fact it was heading for a disaster if nothing was done.

The key theme to this paper is about learning from events, positive or negative. So what happened with this team to make improvements and learn from failure?

- The manufacturer recognised the human-machine interface issue and escalated this specific caution to a warning in the system and changed the wording on the handset to something more relevant.

- The manufacturer ceased sending units back with cells fitted and at the same time found a more reliable supplier.

- The team went back to basics in terms of how they managed risk, rewriting many of their procedures in the process.

- They also reinforced their life support and rescue skills as these were shown to be crucial in avoiding a fatality during the event.

- Finally, the team have also undertaken three training sessions with the author to develop their non-technical / human factors skills with a view to becoming a higher performing team which includes developing a learning and just culture so that the team can talk about mistakes more easily.

Summary

A key point to recognise from this paper (blog) is that human error is normal and that accidents and incidents will happen, no matter what operators, supervisors and organisations try to do to mitigate them. Consequently, there is a need to take a different view of safety, something which can be summarised by this quote:

“Safety is not the absence of accidents or incidents, but rather the presence of defences and barriers and the ability of the system to fail safely.” - Todd Conklin

There is an urgent need to change our attitudes if diving safety is to be improved; blaming people for being ‘stupid’ is not going to make a difference to safety. In fact, it could be argued that it is having a detrimental effect because failures are not being talked about and therefore the real risk which is being faced is not known. The author believes that the investigation and discussion of fatalities is not the most effective way of improving diving safety, especially when litigation is about pinning the blame on one party or another. As a result, the context and real decision making are often missing, especially when it comes to violations and rule-breaking. Furthermore, in many cases, the person who knows how it made sense to make the decisions they did is dead. Therefore, there is a need to create a culture within communities and organisations that recognises human error for what it is and allows those who have made mistakes, errors and violations to talk about them, free from the fear of social or legal retribution. This is known as a ‘Just Culture’ and is one of the reasons why aviation is as safe as it is.

This paper will close with a quote from a chapter called ‘Mistaking Error’ (12) from the Patient Safety Handbook by Woods and Cook to highlight the complexity of the situation so that when something does go wrong, look deeper than ‘human error’.

“…in practice, the study of error is nothing more or less than the study of the psychology and sociology of causal attribution…Error is not a fixed category of scientific analysis. It is not an objective, stable state of the world. Instead, it arises from the interaction between the world and the people who create, run and benefit (or suffer) from human systems for human purposes.” - author’s emphasis.

References:

1. Bierens, J. Handbook on drowning: Prevention, rescue, treatment. 50, (2006).

2. Denoble, P. J. Medical Examination of Diving Fatalities Symposium: Investigation of Diving Fatalities for Medical Examiners and Diving. (2014).

3. Denoble, PJ, Caruso, JL, de Dear, GL, Pieper, CF & Vann, RD. Common causes of open-circuit recreational diving fatalities. Undersea Hyperb Med 35, 393–406 (2008).

4. Parry, G. W. Human reliability analysis—context and control By Erik Hollnagel, Academic Press, 1993, ISBN 0-12-352658-2. Reliability Engineering & System Safety 99–101 (1996). doi:10.1016/0951-8320(96)00023-3

5. Reason, J. T. Human Error. (Cambridge University Press, 1990).

6. Phipps, D. L. et al. Identifying violation-provoking conditions in a healthcare setting. Ergonomics 51, 1625–1642 (2008).

7. Dekker, S. The Field Guide to Understanding Human Error. 205–214 (2013). doi:10.1201/9781315239675-20

8. Endsley, MR. Toward a theory of situation awareness in dynamic systems. Human Factors: The Journal of the Human Factors and Ergonomics Society 37, 32–64 (1995).

9. Klein, GA. Streetlights and shadows: Searching for the keys to adaptive decision making. (2011).

10. Amalberti, R, Vincent, C, Auroy, Y & de Maurice, S. G. Violations and migrations in health care: a framework for understanding and management. Quality & safety in health care 15 Suppl 1, i66–71 (2006).

11. Cook, R & Rasmussen, J. ‘Going solid’: a model of system dynamics and consequences for patient safety. Quality & safety in health care 14, 130–134 (2005).

12. Woods, DD & Cook, RI. Mistaking Error. Patient Safety Handbook 1–14 (2003).

Gareth Lock is the owner of The Human Diver, a niche company focused on educating and developing divers, instructors and related teams to be high-performing.
