"Known Unknowns" - Are they considered enough in diving...?

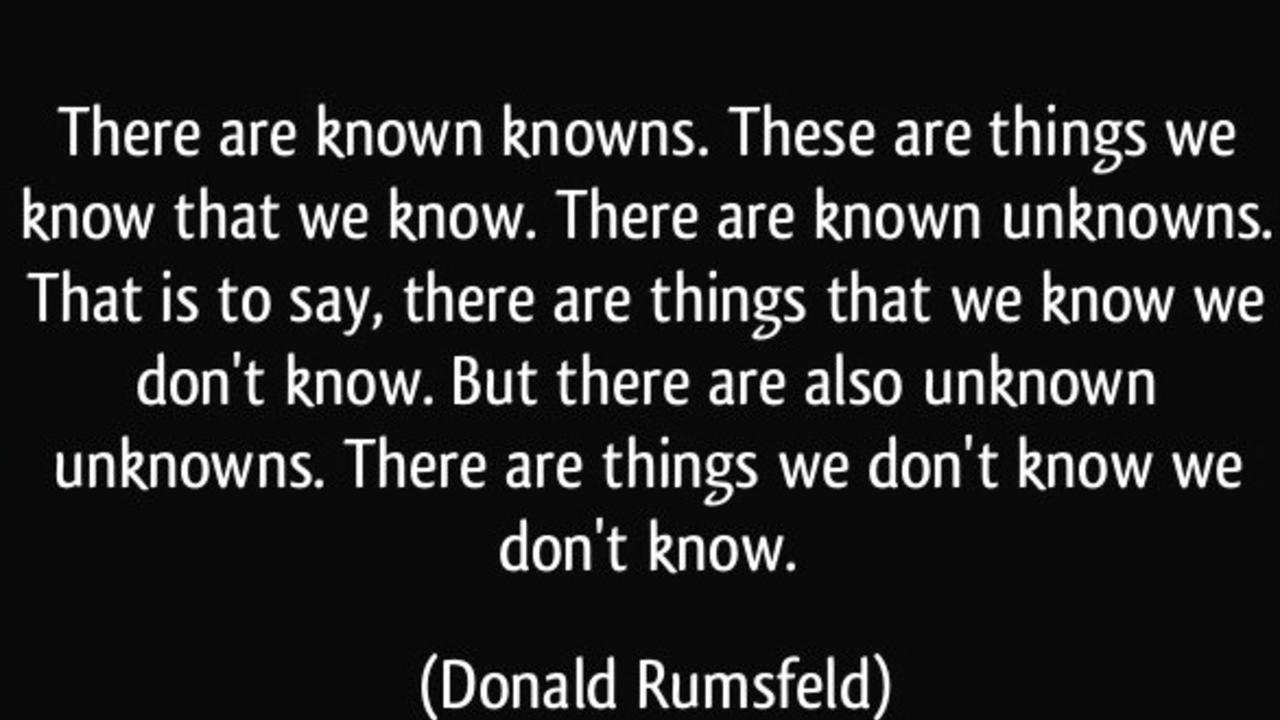

Jan 12, 2017

On February 12, 2002, Donald Rumsfeld, whilst talking about the lack of evidence linking the government of Iraq with the supply of weapons of mass destruction to terrorist groups, stated:

> Reports that say that something hasn't happened are always interesting to me, because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns – the ones we don't know we don't know. And if one looks throughout the history of our country and other free countries, it is the latter category that tend to be the difficult ones.

And whilst he attracted significant negative media attention for this, the concept wasn't new. It draws on a reflective model of self-awareness and interpersonal interaction called the Johari window, and it is now also used more widely in risk management to identify and mitigate the risks being faced.

One of the themes that has come to the fore as I have done my research and delivered training is that risk within the diving community is very much personal and context-driven. This might appear obvious, but it has a massive impact on diving safety precisely because of the "unknown unknowns".

In a previous blog I talked about the Dunning-Kruger effect, a concept explaining that we don't know what we don't know, and, even worse, we don't know that we don't know! Our ability to identify the risks we face, and therefore how to deal with them, depends on our previous experiences. For example, an advanced trimix diver with plenty of experience of real-world conditions and equipment failures would think nothing of a free-flowing back-gas regulator; if this happened at depth, they would simply shut down the offending regulator. An Open Water (OW) diver in a cold-water quarry experiencing a free-flow for the very first time might see it very differently, panic and shoot to the surface.

Many of the risks, failures and adverse events in diving are well understood; they are the 'known knowns'. Examples include gas consumption rates, buoyancy control and basic marine life interactions, or simple failures such as a free-flowing regulator or a lost mask. These are addressed through 'rules', procedures, processes and skills development, which allow divers to apply skills-based or rules-based decision-making to the problems and situations they will encounter. In highly trained individuals, the error rate for skills-based decisions is in the order of 1:10,000; for rules-based decision-making, this rises to around 1:100 (High Reliability Ops).

The next set of risks encountered are the 'known unknowns': the risks that we know WILL happen to someone at some point, but we just don't know when. These could be considered 'Normal Accidents', a term coined by Charles Perrow. An example might be a diver whose plan did not consider failure, and where poor communication meant that not everyone understood what was going on. Task-loaded, they unfortunately run out of gas and, rather than going to their buddy, bolt to the surface, where they suffer a massive DCS event and die.

Such events are 'complicated' in nature rather than complex. By this I mean that each of the steps can be broken down and a solution provided, and reassembling the system will still make it work. However, the glue that makes it work effectively is non-technical skills (situational awareness, decision-making, communication, teamwork/co-operation and an understanding of performance-shaping factors). These problems are solved through knowledge-based decision-making supported by those non-technical skills. Applying them means that these adverse events are less likely to occur in the first place and, if the system does fail, recovery is more likely and safer. Error rates for knowledge-based decision-making are in the order of 1:2 to 1:10 (James Reason), and these errors normally occur because of a mismatch between the sensory information, the goals/drivers being applied and the previous experience of the individuals/team involved.

The final set of risks are the unknown unknowns; here we are talking about very rare events. In diving, an example of this could be Parker Turner's cave collapse, where he and his buddy were caught inside the cave system when part of it collapsed. Bill Gavin, the buddy, made it out, but unfortunately Parker died. For an event to sit in this category, it can't have happened before or have been thought possible; otherwise it would sit in the 'known unknowns'. It also can't be disassembled and reassembled in exactly the same way, given the number of moving parts. This is often the case when a number of people are involved in an incident and their combined effects create emergent situations. Another example might be the Aquarius project fatality, when an underwater pneumatic drill caused a unique sequence of button presses on the diver's AP Inspiration rebreather, which shut the unit down; this led to a hypoxic situation and the diver died. The diver should have followed the protocol of checking their handset regularly, but didn't. By definition, there are no rules or skills to deal directly with this situation; otherwise it would be in the known unknowns. Therefore, dealing with unknown unknowns requires both technical and non-technical skills along with robust problem-solving, potentially thinking around the problem rather than through it, to create a new solution to something that hasn't been encountered before. Importantly, the community learns from these events, and moves them into the known unknowns, through incident reporting and analysis.

The following graphic shows these three separate classes of events. Notably, because the known knowns are the easiest to solve, both mentally and physically, this is where most of the effort is expended. It is harder to prepare ourselves for known unknown situations because, whilst we know they could happen, unless we can recall them easily (the availability heuristic), they are unlikely to be at the forefront of our preparations.

Knowledge-based decision-making draws on previous experience, direct or indirect. Experts can make better (more effective and reliable) decisions because they have more experiences to refer to. They also understand their cognitive weaknesses and the biases they face, and try to work around them with effective teamwork and communication skills.

And herein lies the rub for inexperienced divers: they don't know the 'known unknowns' because they haven't been exposed to certain situations, owing to the limitations of their training and potentially the limitations of their instructors. Often they lack expertise in the skills needed, so when faced with a situation they haven't come across before, they move rapidly through skills (which they don't have, or make an error executing), to "is there a rule I need to follow?" (which they can't remember), and then on to knowledge-based decision-making, where they have to try to make the pattern (solution) fit what they consider best given the information they have. Often it works out because the exposure has been managed effectively (primarily depth and limited decompression exposure); however, when these have not been managed well, serious injury or fatality can result.

So, if you at least start to understand what you don't know and the impact it might have on your ability to deal with the known unknowns, you can start to develop the skills needed to operate in the top two-thirds of the diagram above. That development process includes both your technical skills and your non-technical ones.

Gareth Lock is the owner of The Human Diver, a niche company focused on educating and developing divers, instructors and related teams to be high-performing. If you'd like to deepen your diving experience, consider taking the online introduction course, which will change your attitude towards diving, because safety is your perception. Visit the website.
