Normalisation of Deviance: It's not about rule-breaking

Jul 23, 2022

“As we sat ready to get into the water for a normoxic trimix dive in 50m, I looked down at my 21m stage and realised that I hadn’t analysed the gas in it that morning. The date on the analysis tape was from yesterday. We had a strict approach to analysing gas each day. No analysis, no diving. I quickly asked one of the deck crew for an analyser. She came back, I put the analyser on the valve, and let the gas flow. The analysis only took a few seconds: it was 51.3%. Back on with the 1st stage and a few minutes later I was stepping off the boat with my team to start the dive.”

Did I need to do that check? It was the same stage I had analysed the day before. The technical answer is no. The social and cultural answer is yes. Deviance is a social construct, not a technical one. The standards we had within our team were there to hold each other, and myself, to account: to be able to say to one another, “This is what we do around here, follow the standards.” It was a culture of mutual accountability. Some might think that such rule-following is about blind compliance and is cult-like behaviour. However, my perception is that it is about following the standards because I want to, because I know I am fallible. It is like pilots who follow checklists to ensure their landing gear is down. They too know they are fallible and so hold each other to account to follow the checks as they are written. Importantly, by having a clear standard within the team or organisation, drift is less likely. However, it does require a psychologically safe environment to call the other person out (and expect to be called out yourself). Psychological safety is the basis for effective teamwork.

This blog is going to dig into the concept of Normalisation of Deviance and explain that it isn’t about rule-breaking but rather it is a social construct and therefore if we want to reduce the normalisation of deviance, we must focus on the social aspects, not the technical ones, of rule-breaking and rule-following.

A chunk of phosphorus on a wreck in Shetland! (Not something you'd want to bring back on board!)

Hazards, Risks and Uncertainty

This section might appear to be going off on a tangent, but it is fundamental to deviation and its normalisation. Much of what we do in diving is about risk management because diving takes place in an inherently hazardous environment. The primary hazards we face while diving are hypoxia, hyperoxia, hypercapnia, arterial gas embolism/gas expansion injuries and trauma. These primary hazards can be encountered by drowning, entanglement, rapid ascent, scrubber breakthrough/missing scrubber material, incorrect/unknown breathing gas for the depth, running out of gas along with many other things.
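The gas analysis in the opening anecdote is a control against one of these routes, an incorrect or unknown breathing gas for the depth. The check behind it can be sketched as below. This is a minimal illustration, not a dive-planning tool: it assumes 1 bar at the surface and 1 bar per 10 m of seawater, and the 1.6 bar oxygen partial pressure limit is an assumed value for illustration rather than one stated in this article. The function names are hypothetical.

```python
SURFACE_PRESSURE_BAR = 1.0  # assumption: sea-level surface pressure
METRES_PER_BAR = 10.0       # assumption: common seawater approximation


def ambient_pressure_bar(depth_m: float) -> float:
    """Absolute pressure at depth under the simple 10 m per bar rule."""
    return SURFACE_PRESSURE_BAR + depth_m / METRES_PER_BAR


def oxygen_partial_pressure_bar(o2_fraction: float, depth_m: float) -> float:
    """Partial pressure of oxygen: gas fraction times ambient pressure."""
    return o2_fraction * ambient_pressure_bar(depth_m)


def max_operating_depth_m(o2_fraction: float, ppo2_limit_bar: float) -> float:
    """Deepest depth at which the gas stays at or below the chosen limit."""
    if not 0.0 < o2_fraction <= 1.0:
        raise ValueError("o2_fraction must be in the range (0, 1]")
    return (ppo2_limit_bar / o2_fraction - SURFACE_PRESSURE_BAR) * METRES_PER_BAR


if __name__ == "__main__":
    analysed_fraction = 0.513   # the 51.3% reading from the anecdote
    planned_depth_m = 21.0      # the stage was marked for 21m
    assumed_limit_bar = 1.6     # assumption: illustrative limit only

    ppo2 = oxygen_partial_pressure_bar(analysed_fraction, planned_depth_m)
    mod = max_operating_depth_m(analysed_fraction, assumed_limit_bar)
    print(f"ppO2 at {planned_depth_m:.0f} m: {ppo2:.2f} bar")
    print(f"Depth limit at {assumed_limit_bar} bar: {mod:.1f} m")
```

The calculation only tells you whether the gas matches the plan. Whether the analysis is actually repeated every day is the social question this article is concerned with.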

A hazard is something that can cause physical harm to us or someone else. Hazards are not risks. A risk is the probability that something will happen that allows the hazard to materialise and cause harm to us, combined with the consequence of that harm e.g., the risk of a fatality while diving is in the order of 1:200 000 dives. The problem for us is that mentally 1:200 000 is a very small number, and it applies to the wider population of divers, not just me. Although technically I am one of that population, several cognitive biases/heuristics (mental shortcuts) mean that I don’t necessarily accept that that one might actually be me!
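To see why a per-dive figure can feel negligible and still matter, the arithmetic can be sketched as follows. This is a rough illustration rather than an actuarial model: it assumes every dive carries the same 1:200 000 probability and that dives are independent of each other, neither of which is true for real divers. The function name and the dive counts are hypothetical.

```python
def cumulative_risk(per_dive_probability: float, dives: int) -> float:
    """Probability of at least one fatal outcome across `dives` dives.

    Assumption (illustrative only): each dive is independent and carries
    the same probability, which real diving does not guarantee.
    """
    if not 0.0 <= per_dive_probability <= 1.0:
        raise ValueError("per_dive_probability must be between 0 and 1")
    if dives < 0:
        raise ValueError("dives must be non-negative")
    # P(at least one) = 1 - P(none) = 1 - (1 - p)^n
    return 1.0 - (1.0 - per_dive_probability) ** dives


PER_DIVE = 1 / 200_000  # population-level figure quoted in the text

if __name__ == "__main__":
    for dives in (1, 100, 1_000, 5_000):  # hypothetical diving careers
        print(f"{dives:>5} dives: {cumulative_risk(PER_DIVE, dives):.4%}")
```

Under these assumptions the cumulative figure grows roughly in proportion to the number of dives, yet it still describes a population average, not any individual diver or any particular dive.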

Managing Risk

To ensure risks are kept at an acceptable level, professional risk managers have four ways of managing risk: treat, tolerate, terminate, or transfer.

- Treat. Controls are used to reduce the likelihood that the hazard will be encountered, and mitigations are used to reduce the impact/severity WHEN the hazard is encountered e.g., gas planning and training to effectively monitor gas consumption while diving are controls, whereas gas sharing and drop bottles are ways in which the risk of hypoxia (due to running out of gas) can be mitigated.

- Tolerate. This is where the residual level of risk after ‘treatment’ is acceptable, although it might not be zero e.g., we plan for one catastrophic failure on a dive because otherwise ‘excessive’ gas reserves are needed, especially when it comes to deeper dives. Note, we could still end up with a serious injury or fatality. Different people will have different levels of risk tolerance or risk acceptance based on their experiences, training, mindset, and environment.

- Terminate. This is where we end the dive because the risk has become too great for the linked reward. ‘Terminate’ could happen before the dive team enters the water because weather conditions have deteriorated to a level where a surface rescue would be considered too difficult, or during the dive when a piece of equipment fails e.g., a regulator is bubbling excessively, and the dive is called. “Anyone can thumb a dive at any time for any reason” is the mantra that supports this, although a lack of psychological safety and sunk costs don’t make this easy.

- Transfer. This is where someone else ‘owns’ the residual risk after you have applied some form of treatment. It normally takes the form of insurance or waivers. In the diving industry, much of the residual risk in a training environment is transferred to the dive centres and instructors to manage i.e., if something goes wrong, it is their issue to resolve, not the agency’s or manufacturer’s. In a ‘fun-diving’ environment, some of the risks can’t really be transferred, although medical insurance transfers the costs of the chamber or other medical support to the insurance company.

Note that no matter how you manage the risk, the hazard still remains. Unless you don’t dive, you can still encounter the hazards, no matter how many pieces of paper you sign. What the paperwork does is transfer the (financial) mitigation to an insurance company.

Uncertainty Management, not Risk Management

Most divers are not professional risk managers. Furthermore, because they do not have the numerical data to make effective decisions, they manage uncertainty, not risk. Uncertainty is mostly managed by cognitive biases, heuristics, and emotions.

- If something doesn’t end up as a bad outcome, it must have been a good decision (outcome bias).

- I don’t think it will apply to me because I am different to that diver (distancing through differencing).

- I haven’t heard about that sort of thing happening, or if I have, it was a long time ago. Therefore, it doesn’t really exist or apply to me (recall and recency biases).

- It will be alright, even though things look marginal (optimism bias).

- No one else sees an issue with this hazard, therefore I won’t say anything as it must be okay (social conformance).

- If I end this dive now, I am going to let others down (peer pressure).

- I have invested time and money in this dive trip, and even though I know I have an issue with my equipment, I am going to dive anyway (sunk-cost fallacy).

Finally, as diving is a social activity, we need to recognise that many of the biases we use to manage uncertainty are based on social norms.

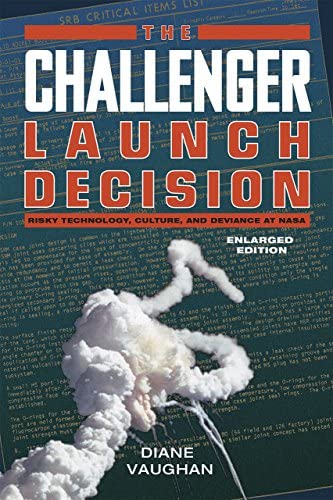

Challenger and NASA – Normalisation of Deviance

The term ‘Normalisation of Deviance’ was coined by Diane Vaughan in her book, ‘The Challenger Launch Decision’ where she describes in detail how NASA and Morton Thiokol didn’t break any rules, but rather managed risks using engineering judgement to move from an original baseline focused on ‘why should we launch?’ to one which was shaped around the concept of ‘why shouldn’t we launch?’ The organisations gradually socialised the increased risks they were taking as being normal. In their eyes at the time, they weren’t being unsafe.

She defined a Normalisation of Deviance as a process where a clearly unsafe practice (in hindsight) comes to be considered normal if it does not immediately cause a catastrophe:

“a long incubation period [before a final disaster] with early warning signs that were either misinterpreted, ignored or missed completely” and

“the gradual process through which unacceptable practice or standards become acceptable. As the deviant behaviour is repeated without catastrophic results, it becomes the social norm for the organisation.”

Fundamentally, no moral compass pointed in the wrong direction toward unsafe decisions. The engineers and managers used their professional judgement in risky situations to make sense of the problems they faced and come up with ‘safe’ solutions. On numerous occasions, the engineers and managers had previously stopped launches when they considered the situation to be unsafe. However, regarding the O-ring issue, they had normalised the risk because, over time, nothing had gone wrong despite evidence to show the risk was credible.

What about speeding as an example of Normalisation of Deviance?

If Normalisation of Deviance isn’t about rule-breaking, why is this example used? The reason is that it isn’t the rule-breaking that is being looked at, it is the social acceptance of the rule-breaking. Once multiple people break the speed limit, it is easier to conform to the ‘illegal’ norm than it is to comply and follow the rules. Humans are wired to conform to social norms because conformity had evolutionary benefits. Stay with the tribe inside the thorn bushes or be cast out to where the wild animals are on the savannah? In Aberdeen, a city based around the oil and gas industry, pedestrians are more likely to use pedestrian crossings due to the culture of offshore workers following rules in high-risk environments, compared to Glasgow where they don’t have the same culture!

Diving and Normalisation of Deviance

Now that we know that the original concept of Normalisation of Deviance doesn’t refer to rule-breaking, how does it apply to diving?

First off, what does ‘safe’ mean in the context of diving? Remember, diving takes place in an inherently hazardous environment. Other than an absence of fatalities, there isn’t anything obvious, and therefore ‘safe’ becomes a social construct based on the absence of ‘adverse events’. We should note that some groups are more risk averse than others and would include near misses and non-compliance with ‘rules’ as adverse events, as there has been a reduction in safety margins.

The ‘rules’ that are used to create ‘safety’ in diving rarely have any legal standing; rather, they are socially constructed as being ‘good’ or ‘best’ practice. Who decides what ‘good’ or ‘best’ practice is? Grey areas make it harder to hold others to account, and therefore drift is more likely.

Drift at the level of the diving industry

The following are examples at the industry level:

- Continued reduction in safety margins e.g., fewer hours to start the instructor development process.

- Acceptance that minimum experience requirements become targets.

- The conflict between sales and marketing to make courses accessible and competitive and the training/safety departments pushing back as the hazards still remain.

- Social acceptance that courses are cheap, wages are low, and standards are not where they should be, and yet nothing is done at the industry level to address this as it would impact revenue generation.

- The RSTC and similar bodies have limited influence to increase standards as the membership would inherit the more stringent requirements, thereby reducing revenue e.g., a UK HSE report from 2011 on CCR safety strongly recommended that Human Factors (HF) training be included as part of CCR training, but nothing has been done to incorporate it.

Drift at the level of the training organisations/agencies

- Agencies’ inability to consistently deal with instructors who are drifting and do not appear to want to come back to the agency standards, because those instructors generate significant revenue or kudos for the agency.

- Quality control is reactive rather than proactive, as it responds to serious events rather than looking for what makes it hard for instructors/dive centres to do their job well or addressing why drift is happening in the first place.

- Having minimal or no required intervals between certification courses, even though such intervals would allow skill consolidation and competency development.

Drift at the individual/team level

The following will be more obvious examples of the Normalisation of Deviance. However, we should recognise that these aren’t just individual actions. The Normalisation of Deviance is about the social acceptance of these activities and an inability to challenge the drift.

- Reduction in gas minimums to maximise bottom time.

- Progressing quickly through the training system without building experience.

- Increased depth well beyond qualification/certification limits.

- Penetrating wrecks/caves without guidelines being in place.

- Reducing decompression safety margins and getting out of the water more quickly.

- Purchasing ‘shiny stuff’ as a solution for gaps in skills and competencies.

We should recognise that these individual issues often have their roots at the higher levels described above.

How do we address the Normalisation of Deviance?

It is all very well pointing out the problems, but how do we solve them? First off, recognise that Normalisation of Deviance isn’t about the rule-breaking per se, it is about the social acceptance of the drift that is happening. The lack of clear performance-based standards across the industry means it is harder to hold people, centres, and organisations to account when they drift. Past successes are no guarantee of future success.

The lack of psychological safety inside agencies and across the community makes it harder to challenge those who are not conforming to standards. This includes senior instructors, ITs/IEs or CDs. Just because an individual holds a position of ‘seniority’ within the industry, it doesn’t make them immune from drift. If you are role-modelling sub-standard behaviours, then others WILL emulate you because of social conformance. Create and support an environment where challenge is rewarded.

Developing leaders within the industry is critical. Genuine leaders can influence others without the need to use authority. Effective leaders build relationships and understand what ‘work as done’ is really like, rather than assuming that compliance is the only option and that if instructors appear to be compliant, then all is well. Effective leaders will also support those who demonstrate constructive dissent: those who find holes in the system from a position of improvement, not from a position of malice.

Support a Just Culture to understand how it made sense for a near miss, incident, or accident to happen. Accidents (in the main) don’t happen as one-offs. They have a history. There will be signals or signs in the past that have highlighted where the gaps are, gaps that have been socially accepted over time.

The bottom line is that we should be proactive, rather than reactive, because preventing a Normalisation of Deviance is easier than correcting it.

Gareth Lock is the owner of The Human Diver, a niche company focused on educating and developing divers, instructors and related teams to be high-performing. If you'd like to deepen your diving experience, consider taking the online introduction course, which will change your attitude towards diving because safety is your perception. Visit the website for details.
