The Backfire Effect: Why our brains make it difficult to change our minds

Mar 27, 2024

Last week I spoke about cognitive dissonance, which is when you have two conflicting thoughts in your head. I mentioned the backfire effect, so this week I’d like to dig a little deeper into it.

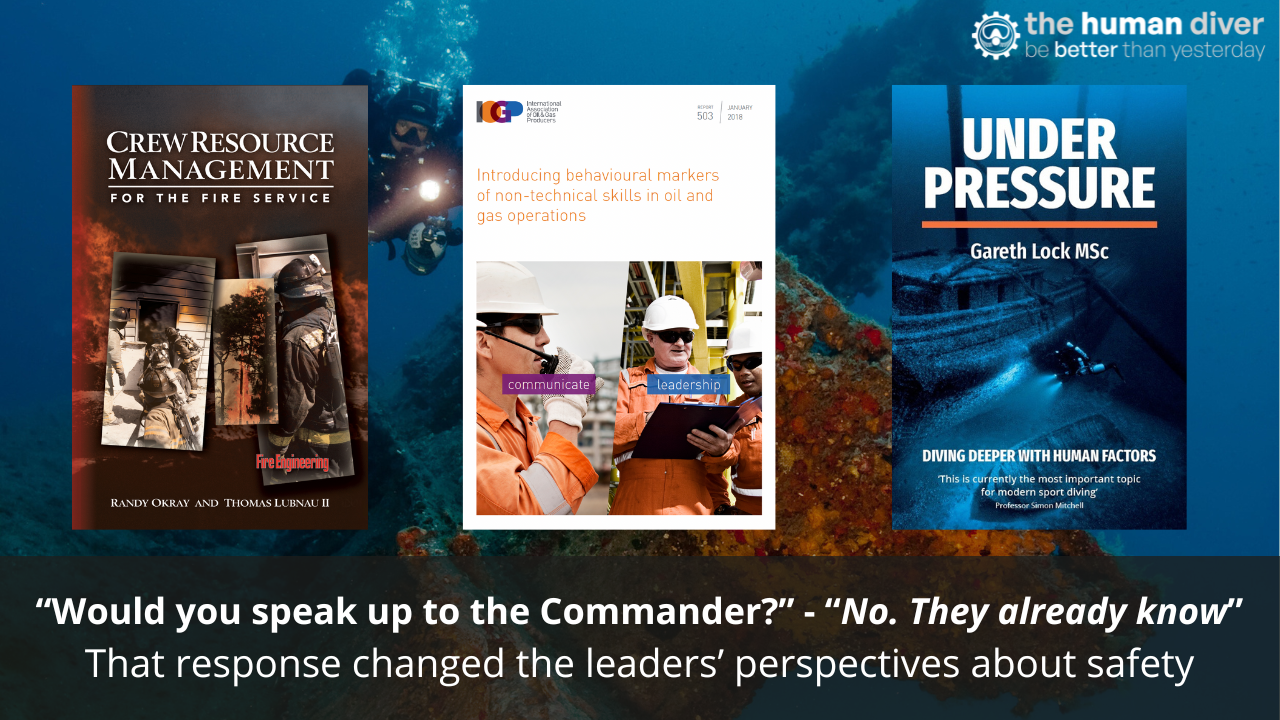

The backfire effect is sometimes called belief perseverance. It occurs when we are presented with information that contradicts a core belief. As we saw last week, the brain tries to protect itself from conflict, either by changing the belief or by changing our actions. However, sometimes the brain goes in the opposite direction: if the belief is particularly strong (i.e. long-held or deeply ingrained), there’s a chance that you will reject the information outright and strengthen your current belief instead. This is because of the way we analyse information. When we hear something unimportant from a previously reliable source, we tend to accept it. But if that information runs counter to something we strongly believe, we tend to analyse it more thoroughly by checking it against some of the following criteria: Compatibility, Coherence, Credibility, Consensus and Evidence.

We can approach these five criteria in two different ways: analytically (System 2 thinking) or intuitively (System 1 thinking).

If we approach them analytically, we might ask the following:

Compatibility: Is this compatible with knowledge retrieved from memory or obtained from trusted sources?

Coherence: Do the elements fit together in a logical way? Do the conclusions follow from what is presented?

Credibility: Does the source have the relevant expertise? Does the source have a vested interest? Is the source trustworthy?

Consensus: What do my friends say? What do the opinion polls say?

Evidence: Is there supportive evidence in peer-reviewed scientific articles or credible news reports? Do I remember relevant evidence?

The problem is that when something conflicts with a core belief, our brain doesn’t always allow us to react analytically. Instead, it gives a “knee-jerk”, emotional, intuitive reaction. This means we tend to go by feelings rather than thoughts, which gives us a very different set of questions:

Compatibility: Does this make me stumble or does it flow smoothly?

Coherence: Does this make me stumble or does it flow smoothly?

Credibility: Does the source feel familiar and trustworthy?

Consensus: Does it feel familiar?

Evidence: Does some evidence easily come to mind?

Bear in mind that we rarely consider all five questions. So if something “feels” wrong, it’s easier to accept that it is wrong than to take the time to think about it, analyse it and perhaps change our belief.

In the early 1990s, nitrox was considered the “devil’s gas”. It was widely believed to be dangerous, and yet some crazy people were still experimenting with it. When it came to convincing others that it was in fact safe to use, the pioneers used logical, rational arguments backed up with science. However, that wasn’t enough for most people, because other experts had told them it was dangerous. For them, the argument failed every question except the last one (evidence): it was incompatible with what they knew (both analytically and intuitively), it was difficult to process and didn’t flow, it didn’t come from “my” sources (friends, instructors, colleagues, etc.), and it went against common knowledge. Even when the evidence is strong, it is difficult to convince someone who is reacting emotionally. Let’s try an experiment.

A current contentious issue is vaccination. Whichever side of the discussion you’re on, have a think about some of the arguments from the opposing side. For example:

“Vaccines cause autism” or

“Vaccines don’t cause autism” or

“Mandatory vaccination is a violation of my rights” or

“Vaccination is safe and the right thing to do to gain herd immunity”.

Pick one of those that you disagree with. How do you feel about it? Chances are it will make you feel uncomfortable. But if you start looking online for arguments that support that opposing view, and analyse them using System 2 thinking, there’s a chance you can start to understand the other side. This is exactly how we can counter the backfire effect: deliberately researching the opposing argument can help people understand it well enough to accept it. Many conspiracy theorists have realised they were wrong after doing exactly this (ironically, often while trying to bolster their existing belief so they could argue better with people on the ‘other side’!). This also fits with current research on social media echo chambers, which repeat the views we already agree with back to us while hiding the opposing side’s arguments.

Why does this matter to us as divers?

Things change: research, equipment, methods. Unless we can stay open to change and look at things analytically rather than emotionally, we will find it difficult to keep up. I often use the examples of BCD vs backplate and wing, short hose vs long hose, or learning skills while neutrally buoyant vs on the knees. Even little things, like turning a tank valve back a half turn after opening it, can become a highly contentious issue. If you want to keep an open mind and make sure you haven’t fallen foul of the backfire effect, try researching the opposing argument and analysing it, rather than letting your emotions rule.

Further reading:

https://www.themarginalian.org/2014/05/13/backfire-effect-mcraney/

Making the truth stick & the myths fade: Lessons from cognitive psychology

When (fake) news feels true: Intuitions of truth and the acceptance and correction of misinformation

The Prevalence of Backfire Effects After the Correction of Misinformation

Correcting the unknown: Negated corrections may increase belief in misinformation

Jenny is a full-time technical diving instructor and safety diver. Prior to diving, she worked in outdoor education for 10 years teaching rock climbing, white water kayaking and canoeing, sailing, skiing, caving and cycling, among other sports. Her interest in team development started with outdoor education, using it as a tool to help people learn more about communication, planning and teamwork.

Since 2009 she has lived in Dahab, Egypt teaching SCUBA diving. She is now a technical instructor trainer for TDI, advanced trimix instructor, advanced mixed gas CCR diver and helitrox CCR instructor.

Jenny has supported a number of deep dives as part of the H2O Divers dive team and works as a safety diver in the media industry.

If you'd like to deepen your diving experience, consider taking the online introduction course, which will change your attitude towards diving, because safety is your perception. To find out more, visit the website.

Want to learn more about this article or have questions? Contact us.