When the holes line up...

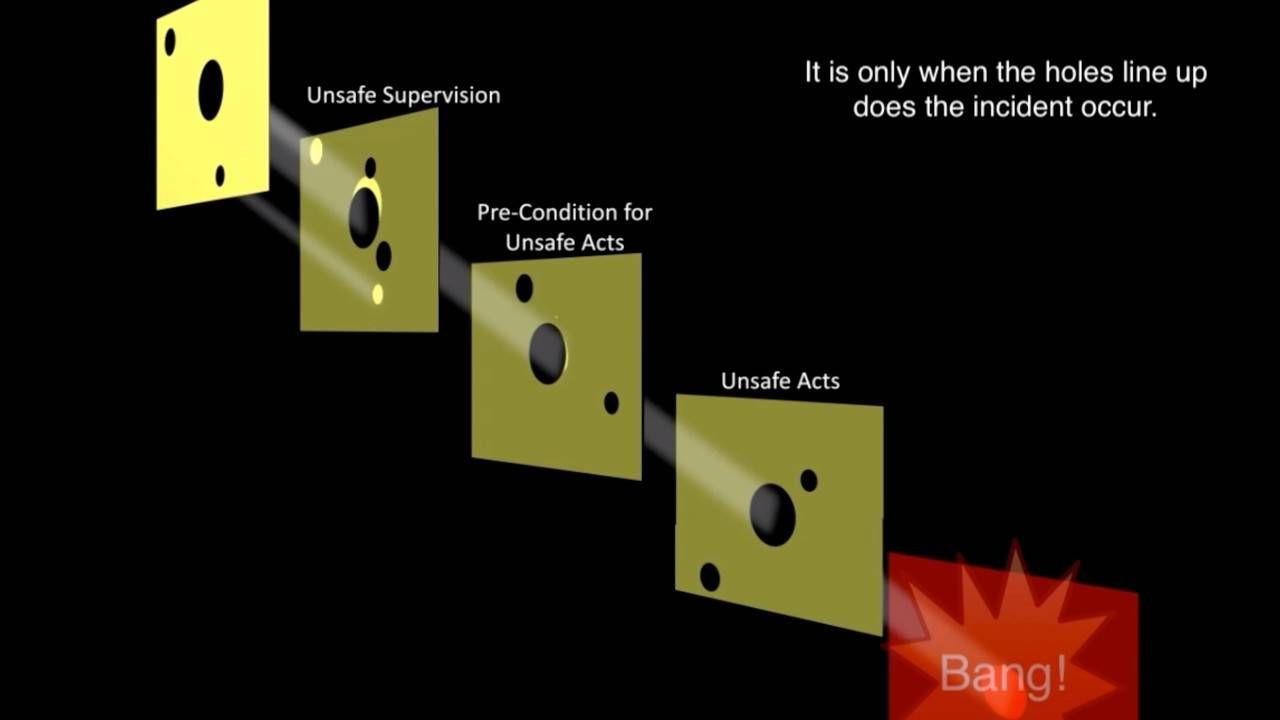

Many of my readers will have heard me talk about Professor James Reason's Swiss Cheese Model and how it can be used to show how incidents develop because of holes in the barriers and defences which are put in place to maximise safety.

Professor Reason's research showed that at different levels within a system, there are different barriers or defences present, e.g. organisational, supervisor and individual. However, these defences can have holes in them because organisations, supervisors and operators are all fallible, and therefore the defences cannot be perfect.

- At the Organisational level, these failures might be poor organisational culture, inadequate diver and instructor training programmes, flawed equipment certification systems e.g. CE or ISO, or misunderstood/misused reward or punishment systems e.g. QC/QA or certificates for the number of certifications completed.

- At the Supervisor level, these gaps might be inadequate supervision when dealing with inexperienced divers/DMs/guides, inappropriately planned diving operations e.g. excessive numbers in marginal conditions, failure to correct known problems e.g. equipment continually failing or staff not suitably trained, or supervisory violations e.g. planning to put DSDs in a cavern zone or not providing enough staff to meet standards because 'it was ok the last time'.

- At the Individual level, these failures could be split into latent issues/pre-conditions for error and unsafe acts or active failures.

  - Latent factors: e.g. environmental factors, mental/physiological state of divers/instructors, or personnel factors such as inadequate non-technical skills or lack of competence in technical skills.

  - Active factors: decision errors, skill-based errors, perceptual errors and violations, which can take multiple forms.

This model has often been shown as a static image with the holes dotted around the place and a shaft of light or beam passing through the holes and an accident occurring on the right-hand side.

The problem with such a model is that the world isn't static and that the holes in the defences move around as humans dynamically create safety by adapting to their surroundings, fixing things that are broken and creating relationships that didn't exist before.

This is why I created the following animation which shows that the holes move around. They also open and close but the person I asked to create this animation said it would be difficult to do that! Notwithstanding this, you can see there is a significant difference between a static image and a dynamic one.

The Simple Swiss Cheese Model

But even this model is flawed because, even though the holes are moving, there is a linearity, i.e. this caused that, which caused the next thing to happen. This is the typical chain reaction described in much of the incident/accident paperwork: identify the critical link in the chain, stop it from breaking, and the accident won't happen.

Something critical which we need to consider is that we are making assumptions all the time to operate in our world. A number of those assumptions rest on the belief that those further up the chain (or around us) will not make any mistakes (and if they do, they will be easy to spot) and that those further down the chain will also not make any mistakes and will correct ours if we make one. We also assume that if our organisation or supervisors ask us to do something, then what they are asking is valid and safe.

Safety is an emergent property of a system

Modern safety research has shown that safety does not reside in a piece of equipment, or a training system, or in a person. Rather, it is an emergent property of the system and it requires multiple factors, people, equipment and the environment to work together to create safety. Furthermore, errors and violations happen all the time, but most of them don't end up as an accident or incident, or even a near-miss.
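As a rough illustration of the point that errors happen all the time but rarely combine into an accident, the toy simulation below treats each layer of defence as having one hole that jumps to a random position at every time step, and counts how often all of the holes happen to line up. The function name, the number of layers and positions, and the idea of a single randomly moving hole per layer are all assumptions made for this sketch; they are not part of Professor Reason's model or of the animations in this post.

```python
import random


def count_alignments(layers, positions, steps, seed=0):
    """Count the time steps on which every layer's hole sits at the same position.

    Hypothetical toy model: each layer has exactly one hole, and at every
    step that hole moves to a uniformly random position, independently of
    the other layers.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    aligned = 0
    for _ in range(steps):
        holes = [rng.randrange(positions) for _ in range(layers)]
        if len(set(holes)) == 1:  # all holes share one position
            aligned += 1
    return aligned


# Illustrative numbers only: 3 layers, 10 possible hole positions.
steps = 10_000
hits = count_alignments(layers=3, positions=10, steps=steps)
print(f"Holes lined up on {hits} of {steps} steps")
```

Under these assumptions, three layers with ten positions each would be expected to line up on roughly one step in a hundred, so a hole is present in every layer all of the time and yet the 'accident' is comparatively rare. Real systems are not random or independent in this way, which is exactly the limitation of simple models discussed in this post.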

I believe that 'unsafety' happens when there is a critical mass of failures/errors/violations and they combine in just the right (wrong!) way and the accident happens. It is for this reason that I created the following two animations.

The 'Big Hole' Model

The first is titled 'Big Hole' and refers to the fact that even though small errors have happened, it is only when a major error or violation occurs that the adverse event actually happens. Two diving examples:

- The diver does not analyse their breathing gas before getting in the water, and the gas in the deco cylinder is 100% oxygen, not 32% as expected. The planned and actual gas switch happens at 30 m, which equates to a pO2 of 4.0 bar. The diver suffers an oxygen seizure at depth and drowns.

- The diver does not open their single cylinder valve properly on a dive which requires a negative entry (no air in the BCD, drysuit emptied of gas too) and a fast descent to the seabed because of high currents. The diver rolls off the boat, starts their rapid descent, has no air in the BCD and cannot breathe. They cannot establish buoyancy as they can't reach their valve, and their drysuit is compressing them, which compounds the matter. By the time their buddies get to them, they are at 30 m and have drowned.

This is signified by the 'big holes' lining up within the system and creating an 'obvious' failure point.
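The arithmetic behind the first example can be checked with a short sketch. It assumes the common rule of thumb that ambient pressure is about 1 bar at the surface plus 1 bar for every 10 m of sea water, and that the partial pressure of oxygen is the oxygen fraction multiplied by the ambient pressure. The function names are made up for this illustration.

```python
def ambient_pressure_bar(depth_m):
    """Approximate ambient pressure in bar.

    Assumption: 1 bar at the surface plus 1 bar per 10 m of sea water.
    """
    return 1.0 + depth_m / 10.0


def po2_bar(fraction_o2, depth_m):
    """Partial pressure of oxygen: oxygen fraction times ambient pressure."""
    return fraction_o2 * ambient_pressure_bar(depth_m)


switch_depth_m = 30
expected = po2_bar(0.32, switch_depth_m)  # the gas the diver thought they had
actual = po2_bar(1.00, switch_depth_m)    # the gas actually in the cylinder

print(f"Expected pO2 at {switch_depth_m} m: {expected:.2f} bar")  # 1.28 bar
print(f"Actual pO2 at {switch_depth_m} m: {actual:.2f} bar")      # 4.00 bar
```

The single unchecked assumption about the cylinder contents turns a planned pO2 of about 1.28 bar into 4.0 bar at the same depth.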

The 'Little Hole' Model

This is why incidents and accidents are so frustrating! Our human mind is quite simple in that we would like to have a simple and reductionist view of the world. Give me the root cause of the accident and then I can make sure I won't make that mistake. If I get bent and you can identify that one factor, e.g. GF, hydration levels, gas mixes etc., then I won't get bent. The problem is, the real world doesn't operate like that. There are always multiple factors involved, many of them predictable, but it is only in hindsight that we see how they add up and reach a critical mass before the adverse event happens. This is why attention to detail and fixing the little things as they come up are so important.

We often hear, "Don't sweat the small stuff", and yet it is the aggregation of small stuff that creates major problems. Some of you who are reading this are used to using risk matrices to see if something is high risk or not and whether the task should go ahead. If it is red, you stop; if it is amber, you make sure controls are in place and try to move the mitigated risk to the 'green zone'. Have you ever considered how many 'green' risks make a 'red' risk?
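One hedged way to put numbers on that question is to ask how likely it is that at least one of several small risks materialises on a given dive. The sketch below assumes, purely for illustration, that each 'green' risk has the same small probability of occurring and that the risks are independent of each other. Neither assumption holds neatly in real diving operations, and the 1% figure and the function name are invented for this example.

```python
def chance_at_least_one(p_each, n_risks):
    """Probability that at least one of n independent risks occurs.

    Assumption: every risk has the same probability p_each and the
    risks do not influence each other.
    """
    return 1.0 - (1.0 - p_each) ** n_risks


# Hypothetical 'green' risk: a 1% chance of occurring on any one dive.
p_green = 0.01
for n in (1, 5, 10, 20, 50):
    combined = chance_at_least_one(p_green, n)
    print(f"{n:>2} green risks -> {combined:.1%} chance that at least one occurs")
```

Even with these generous assumptions, the combined chance grows steadily as small risks accumulate, which is one way of seeing why the small stuff matters. It does not capture how risks interact, which is where the 'critical mass' described above comes from.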

I recently wrote a blog for X-Ray Magazine which was titled 'Human error exposes the latent lethality within the system' which highlighted that as humans we dynamically create safety but when we err, we allow the lethality to propagate through to a lethal conclusion.

We love to simplify things...

Models are just an approximation of reality and they will always be wrong at some level. The closer the model is to reality, the more complex it is and the harder it is to get the concepts across to more people.

Incidents and accidents happen when multiple failures, arising from systemic issues and the variability of human performance, occur together. We are all fallible, irrespective of our experience. 'Experts' are able to predict when errors are more likely to happen and therefore prevent them, and when errors do happen, they are able to work out quickly what to do.

As novices, we can learn how errors happen and focus on error-producing conditions and not just the errors. Errors are outcomes. The following list gives you an idea of what these conditions look like.

Sharing these videos

I am more than happy for readers to share these videos (and this post) as long as they refer back to the sites https://www.thehumandiver.com (diving related) and http://www.humaninthesystem.co.uk (non-diving related) when they do so.

Gareth Lock is the owner of The Human Diver, a niche company focused on educating and developing divers, instructors and related teams to be high-performing. If you'd like to deepen your diving experience, consider taking the online introduction course, which will change your attitude towards diving because safety is your perception. Visit the website.