‘Pilot error’. Don't 'fix' the Pilot. ‘Diver error’. 'Fix' the diver.

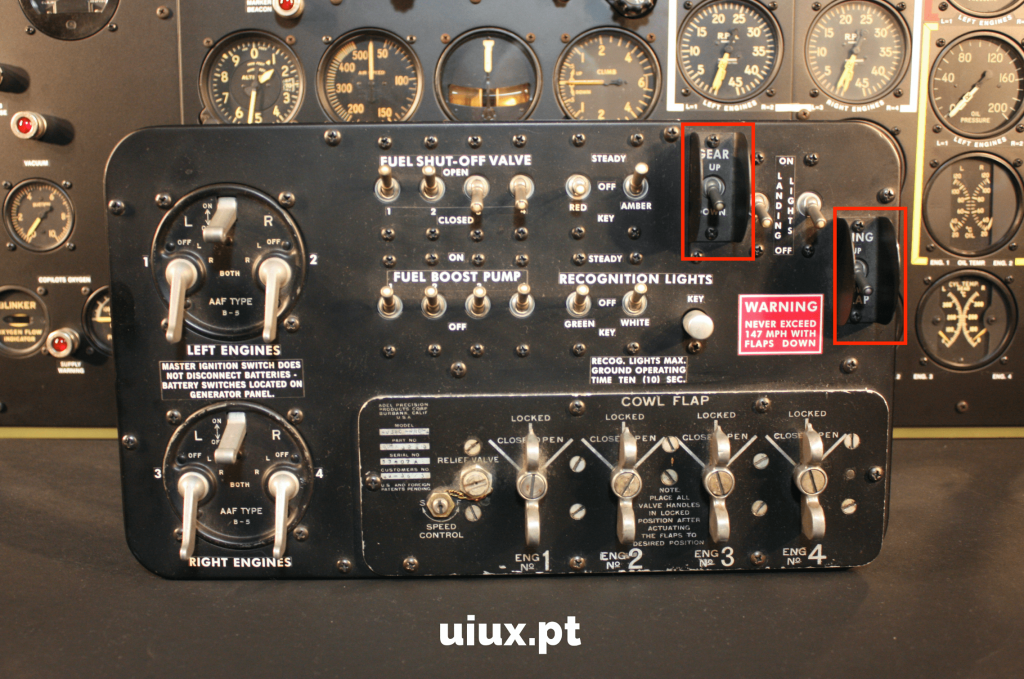

May 14, 2022

During the Second World War, American pilots kept raising the landing gear on their B-17 bombers instead of raising the flaps as they taxied in from bombing raids. The regular response after these accidents was that the pilots should pay more attention, and if they couldn’t, they needed more training. The problem was that more training wasn’t fixing the issue. Things only changed when a psychologist named Alphonse Chapanis looked at what was going on in the cockpit and realised that it wasn’t a human issue, it was a system issue. The controls to raise the flaps were located right next to the controls to raise the landing gear, and they were identical in shape too! Given that these missions were stressful and often undertaken at night, when visual cues were limited, it shouldn’t be a surprise that mistakes were happening regularly, even when the pilots were told to make sure they made the correct selection. The error-producing conditions that were present made it easier to select the wrong switch than to select the correct one. The solution was to separate the switches spatially and, more importantly, to change the feedback the pilots got when they felt the controls with their fingers. The landing gear control now had a wheel on it, and the flaps control had a small lever, so pilots got immediate feedback if they had placed their hand in the wrong place. The issue rarely happened again.

For this change to happen, there had to be a shift in mindset: a realisation that, no matter what the pilots did, they were more likely to make a mistake in the current configuration because they had been set up for failure. Management realised that telling pilots to be more careful and to pay more attention, in effect trying to ‘fix’ the pilot, wasn’t going to make much difference. Even when they punished the pilots, the problems returned after a period of time: because the system wasn’t changed, the fear of punishment eventually subsided and the errors came back. If you fire a ‘bad apple’ without addressing systemic issues, the ‘bad apples’ will return.

Cash Machines

A more recent example of addressing the system, rather than asking customers to be more careful, comes from cash machines (ATMs), which used to give the customer the cash first and the card second. The banks realised that errors were occurring because the customer was goal-orientated (get cash, go shopping/drinking), and once they had their cash, they walked off, leaving their card in the machine.

This cost the banks money in replacement cards but, more importantly, it meant that someone else could take the card and use it for fraudulent transactions. To address this, the banks changed the order in which cash and card were dispensed: the card is now returned first, and then the cash. This means that a customer who walks off early loses only their cash, not their card and potentially much more money. They also made the machine beep to draw the customer’s attention to the cash being presented to them.

The 'causes' of diving accidents

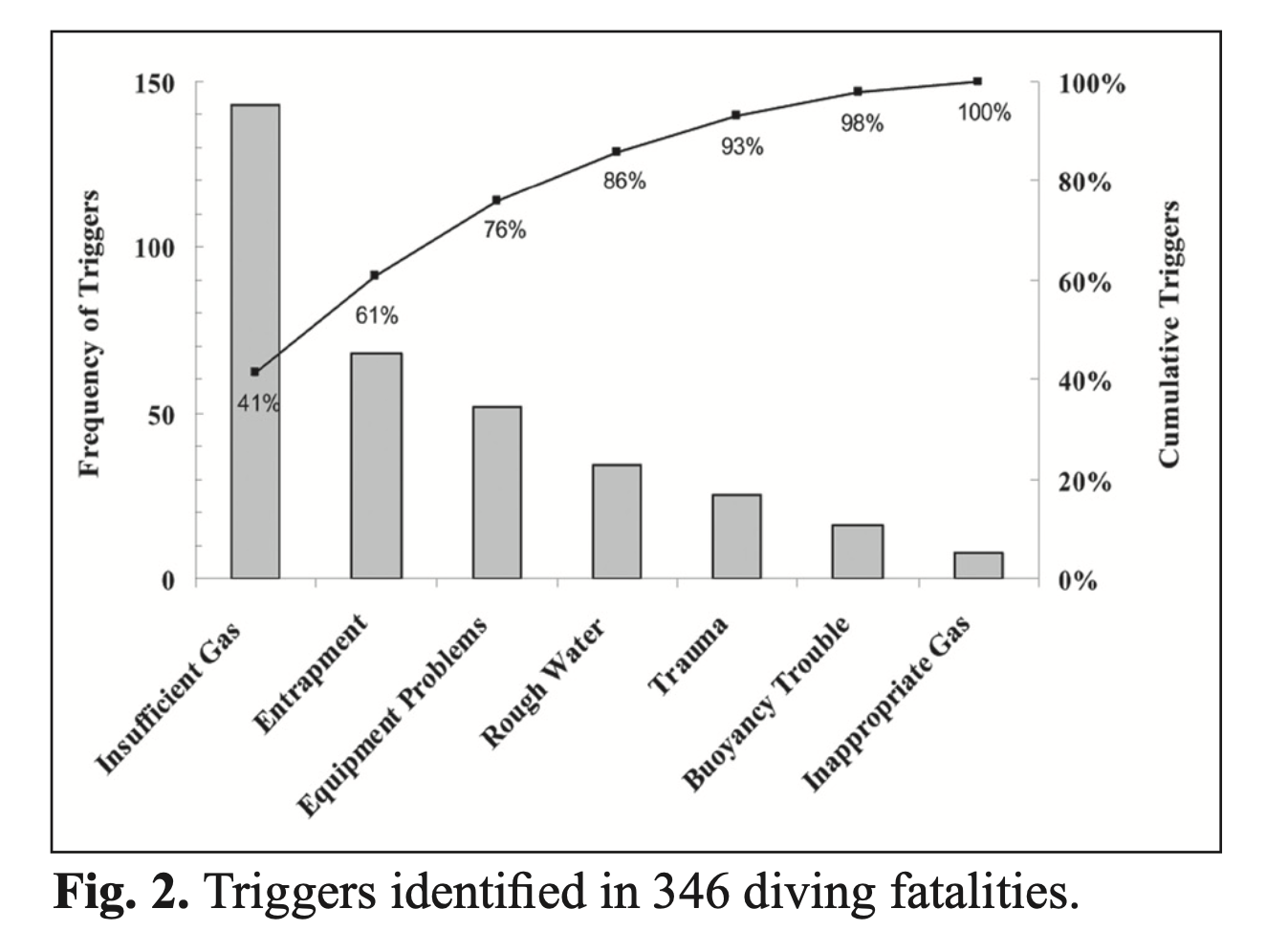

In the world of diving, we appear to have the same failures and accidents again and again. For example, the data from Denoble et al. showed that the trigger event for 346 diving fatalities was insufficient gas in 41% of cases, entrapment in 20% of cases and equipment problems in 15% of cases (see image). The problem with this research is that it was outcome-focussed. It didn’t address causal or contributory factors and, to be fair, this was because the raw data coming in didn’t have that level of detail (and still doesn't).

Furthermore, there isn’t an established investigation process, based on safety science research, that looks for causal or contributory factors from a human factors or systems thinking perspective. The same problem affects the annual diving incident report published by the British Sub-Aqua Club (BS-AC) in the UK. This data is categorised by severity of outcome and not by how the incident developed. For example, an out-of-gas situation following a separation, leading to a rapid ascent and DCS, would be grouped under the DCS category. The analysis doesn’t look for error-producing conditions or causal/contributory factors, again because the reports submitted often don’t contain them and there are limited resources to follow up with those who submit the reports. Furthermore, because there isn’t a reporting culture, or an effective 'learning from unintended outcomes' culture, the many near misses that happen are not captured and the analysis is biased towards fatalities.

'Can't change the human condition'

Professor James Reason said, “You cannot change the human condition, but you can change the condition in which humans work.” What he was getting at was that the mistakes the workers were making weren’t because of their incompetence; they were primarily because the workers had been set up to fail by the system, even when they should have been taking responsibility for their actions. They had received inadequate training, had to use incomplete procedures, had limited time to complete the task, didn’t have effective supervision or coaching, were rewarded for production over safety, didn’t have enough experience for the task, or made inadequate risk assessments because of a lack of knowledge. Safety science has shown that once you start to address the systemic reasons why adverse events occur, in effect moving the focus from the errant worker to the system, then safety and production are significantly improved.

This doesn’t mean that those involved in human factors and systems thinking have accepted that humans will make errors and therefore it is a lost cause. In fact, the opposite is true. Because there is a recognition that humans will fail, systems are put in place to reduce the likelihood that latent issues are present, or to trap errors more often, so that when active failures do occur, they are not catastrophic. Examples of this include the use of effective checklists, two-way closed-loop communications, debriefs, or forcing functions (like USB keys or cash machines).

What is needed for an accident to happen?

Thinking about how accidents happen, there are certain conditions that are needed.

- Latent issues, especially error-producing conditions, which increase the chances that an error isn’t trapped by a diver or instructor,

- A hazardous environment i.e., something that can harm those involved, and,

- An active failure i.e., a slip, lapse, mistake or ‘violation’.

If these conditions aren’t all present together, then an active failure on its own doesn’t really matter. For example, it isn’t catastrophic if a diver loses all their gas at depth, provided they have their buddy next to them, they are well trained and they can make a gas-sharing ascent. However, if they run out of gas at depth and weren’t aware of how far away their buddy was because they were busy taking photos, their ‘choice’ is to make a rapid ascent from depth, which unfortunately leads to DCS, and they end up paralysed. Looking back for context, as a buddy team, they weren’t trained in:

- diving as a mutually accountable team,

- completing a brief that explicitly stated what the goals would be and how they would be achieved,

- checking each other’s location regularly and understanding/building situation awareness,

- how to build a gas monitoring habit while diving at depth,

- how to debrief and learn from suboptimal dives, because separation, reduced awareness and low gas had occurred on previous dives too.

In terms of the surface situation, the boat team wasn’t well practised in treating an emergency, the O2 equipment wasn’t serviceable, and no helicopter was available to lift the casualty because capacity had been reduced by financial cuts. All these post-event factors were already present but had never been ‘relevant’ before.

Make sure the focus is in the right place.

By only focussing on the individual diver and their inherent fallibility, we will fail to see how it made sense for that diver to do what they did, and if we fail to do that, we won’t be able to address the systemic issues because it is easy to say, ‘fix the diver’. We often hear, “the divers should take more responsibility”. How do you take more responsibility if you don’t understand that the risks you are facing are credible because your training hasn’t adequately covered them, or if the mindset or social environment isn’t conducive to recognising the risks involved?

Interestingly, much of the focus on diving safety has been on health-related issues. This is because they often lead to fatal events given the hazardous environment in which diving takes place. It is also because those organisations involved in diving safety, like DAN, have a strong bias towards health-related factors. If healthcare is a continual issue, how do we address this from a systems point of view?

Firstly, recognise that diving is not suitable for all. While you might be able to float in the water if you are heavily overweight and unfit, are you able to conduct a self-rescue, rescue someone else, or be rescued yourself if something doesn’t go to plan? Planning for optimum conditions is not taking a systems view.

Sometimes decisions are taken by the ‘system’ regarding safety and rules are imposed on others. In individualistic cultures (like the US), this approach doesn’t go down well. However, humans have been shown time and time again to not be good decision-makers when they have incomplete information or when there are commercial pressures competing with safety. They have also been shown to be poor assessors of risk when the activity is voluntary: the public “will accept risks from voluntary activities (such as skiing) that are roughly 1000 times as great as it would tolerate from involuntary hazards that provide the same level of benefits.” (Slovic, 1987). I have noticed a certain irony when a serious accident happens. People say, “They should have followed the rules, and then they wouldn’t have ended up dead.” Yet when they are asked if they follow those same rules 100% of the time, the answer is often no. The rules apply to others, not them!

This isn’t to say that government regulation is the answer, as there is already some level of oversight in place. What could be improved is the level of knowledge and experience that divers and diving instructors get, which isn't necessarily the direct responsibility of the agency, but the following is: there is a need for a process by which competence is practically assessed on an ongoing, regular basis, especially for instructors. If instructor trainers are teaching something suboptimal, then instructors are learning this suboptimal skill, which is then passed on to the students on their courses. Incorrect teaching doesn’t have to originate with instructor trainers/course directors; instructors will naturally drift, and unless this is picked up through active monitoring, it remains hidden. This lack of competence isn’t normally an issue until the point when the error-producing conditions and hazardous conditions are present and the active failure (slip, lapse or mistake) occurs. At this point, the accident happens and the dots in the pattern are now visible. As external observers, we can easily work backwards in time and state that it was ‘obvious’ this was going to happen. The thing is, those same latent issues were present before the accident happened, but no-one went looking for them or, potentially more importantly, they didn’t know what to go looking for or didn’t want to go looking for them for commercial/legal reasons.

‘Fixing’ the diver or instructor is never going to solve systemic issues. To address these systemic issues, the investigation process for any adverse event or near miss must look further back than the individual and look to understand ‘local rationality’ – how did it make sense ‘locally’ for that diver to do what they did? Stopping at ‘human error’ or ‘diver error’ means that you either didn’t want to go looking further back to understand the contributory factors or that you didn’t have the skills or knowledge to ask the curious question ‘How did it make sense?’ If all you have is a hammer, the problems that need to be solved just look like nails.

Over the next few weeks, I will be putting materials in the public domain which will help divers, instructors, organisations, and agencies ask those curious questions in a structured manner. At an organisational level, agencies might not want to ask these questions for legal discovery reasons, but there will be those who want to learn how to move from ‘fixing’ to learning. We can only get better if we learn about the system, not just focus on human fallibility.

Gareth Lock is the owner of The Human Diver, a niche company focused on educating and developing divers, instructors and related teams to be high-performing. If you'd like to deepen your diving experience, consider taking the online introduction course, which will change your attitude towards diving because safety is your perception. Visit the website.
